For the last couple of weeks, I have spent several hours every few days learning Runway's Gen-3 Alpha model and exploring how it turns text prompts into moving images. I haven't watched any tutorials or done any research; I just jumped in, the same way we taught ourselves Adobe Premiere and After Effects during the COVID lockdowns. This is how I learn best: with a somewhat cavalier attitude, by jumping in and doing.

However, this was different. I am a creator at heart, and I have spent my career building commercial brand identities, spaces, and experiences with an artist's hand and a mouse. Making language my primary medium of creative expression has not come naturally. I rarely describe things with words alone, and as someone who tends to talk around an idea, I have found it difficult to write prompts with clarity and economy of language.

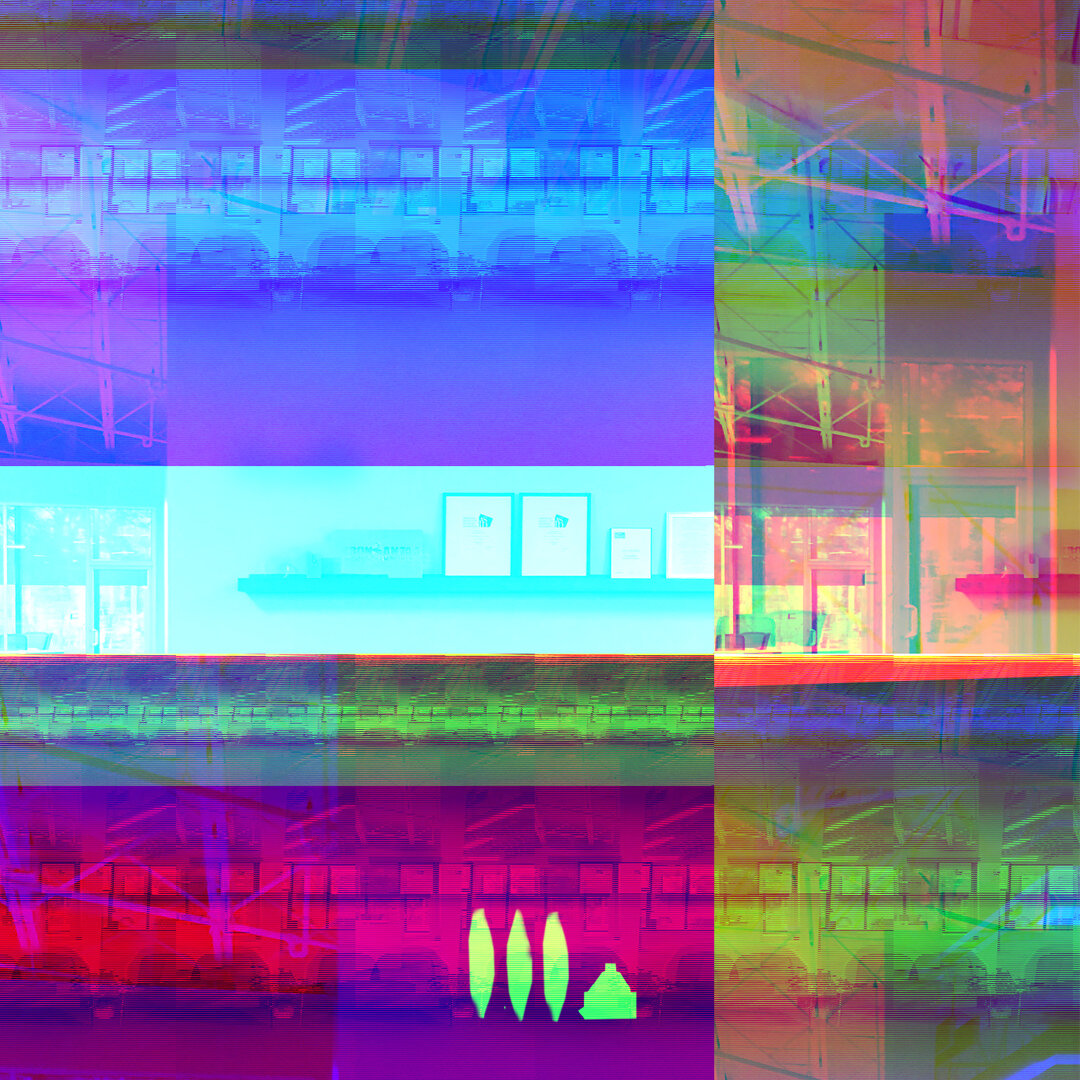

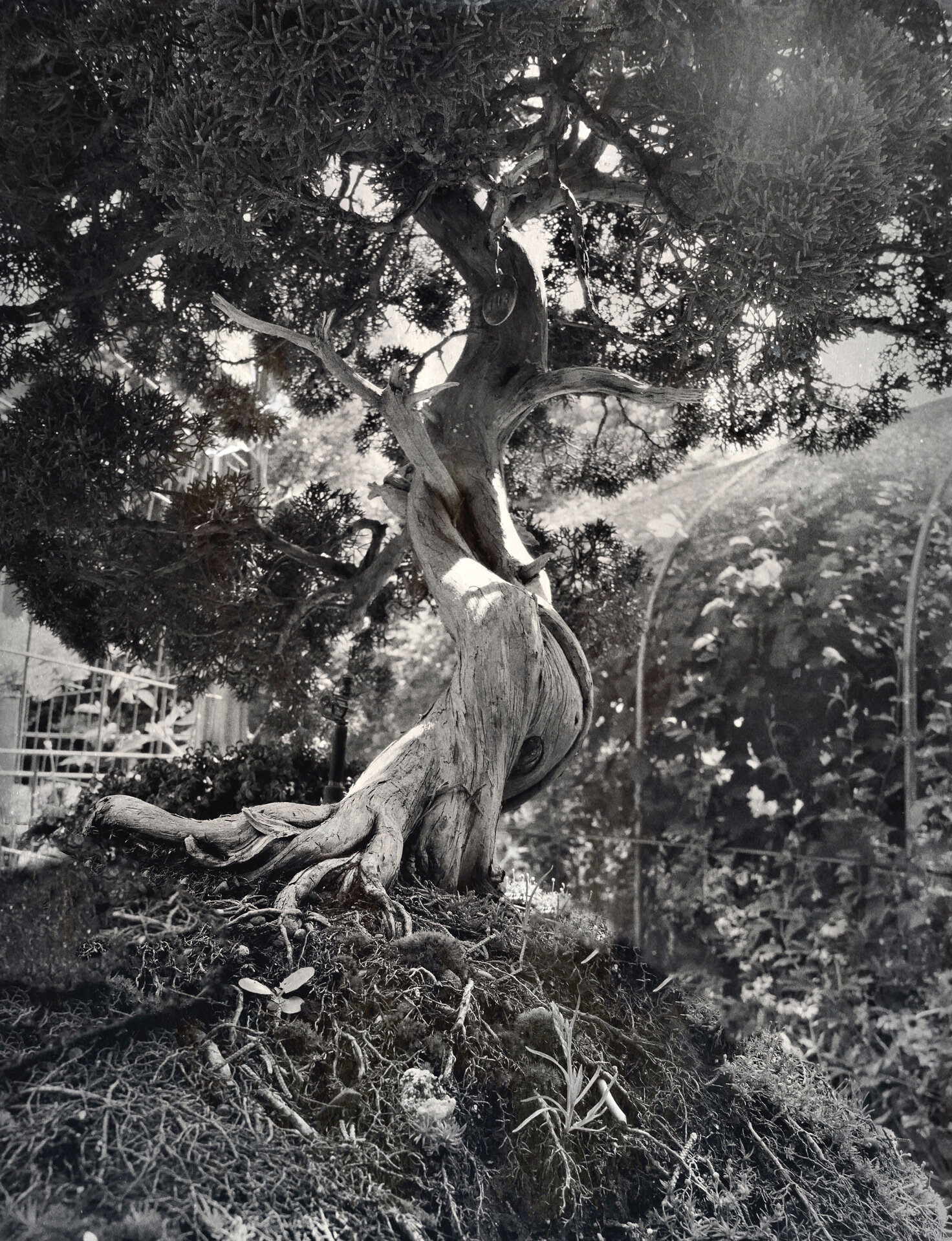

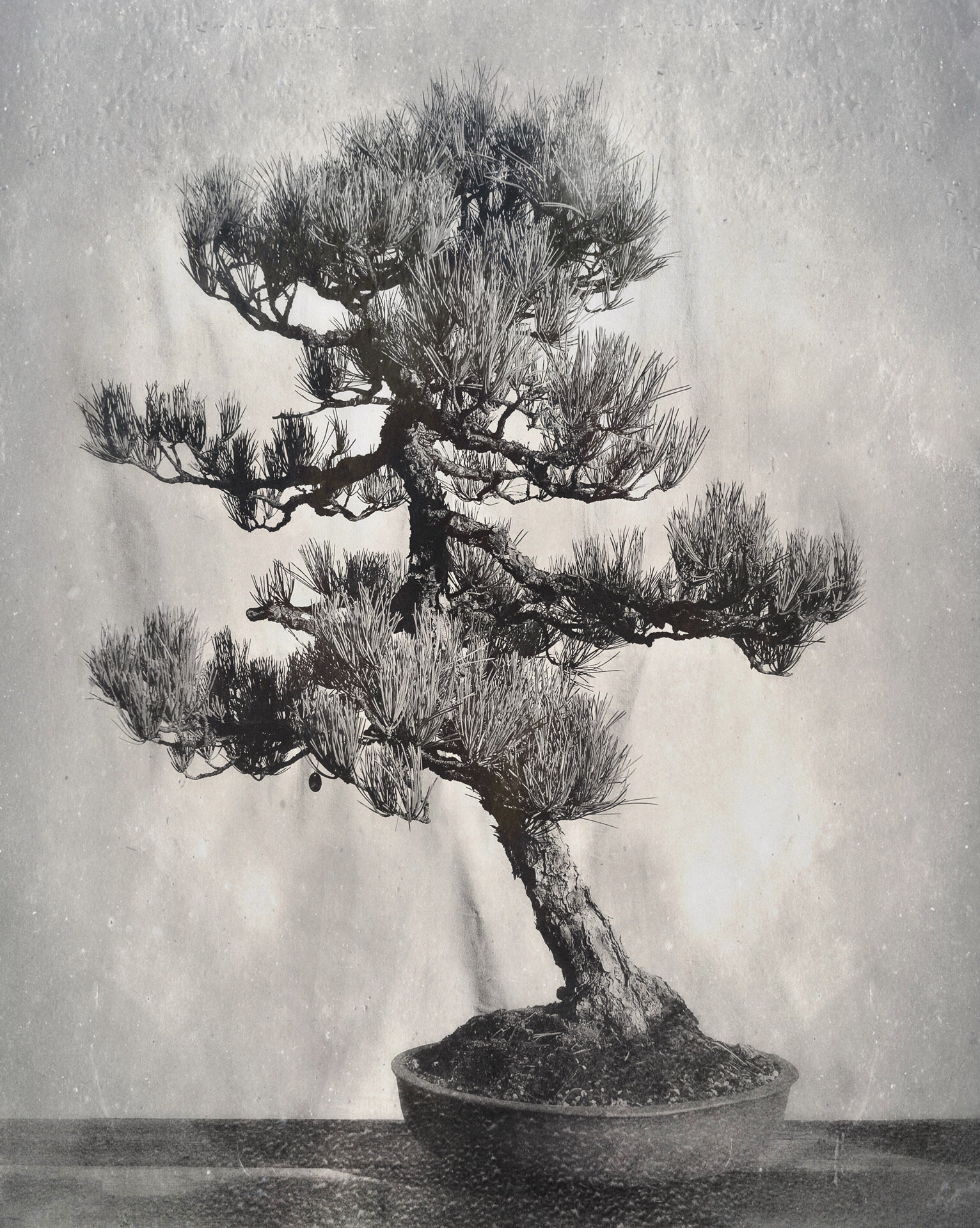

Every session has brought epiphanies and frustrations, and sometimes productive moments that were entirely unexpected. Using a base image helped me control the general composition and provided a reference, but it may also have diluted the prompt, at times making the text almost useless. Making small changes to details was challenging: the AI either ignored what I asked for or rendered the detail differently than I intended. When I asked for fast movement, a shift between fast and slow, or complex motion, I was surprised to find the results less realistic, more stylized, even cartoon-like. In my experience, the strongest animations came from prompts that called for a slow to medium pace of time or movement and also suggested some internal rhythm.

Human motion remains one of my biggest challenges, because the AI still struggles to generate people who move fluidly. I still see the occasional extra limb, hands that disappear, and joints that bend the wrong way. It feels like filming a dancer who has not yet mastered the choreography.

I am in no particular rush. I want to generate imagery that does not immediately "read" as AI. Something with a little more nuance. A little more tied to human concepts of meaning. With patience, I hope to produce imagery I can use both commercially and personally. I will keep working out a methodology and stay committed to experimenting and learning. It is just another medium to add to the reservoir of approaches. Just another language to learn. Just another way to see.